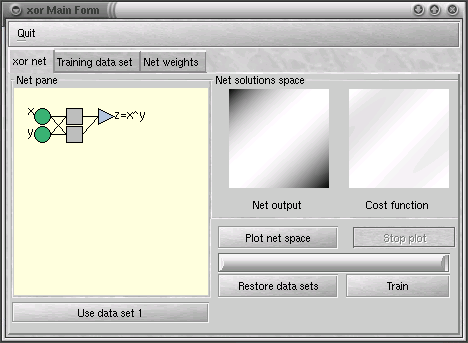

- This simple application, built with vdkann, shows some examples in which the associated error surface contains spurious local minima that lead learning to sub-optimal solutions.

- A source code tarball of the examples shown can be downloaded at: http://sourceforge.net/project/filelist.php?group_id=4017

- The examples are taken from: "Optimal learning in artificial neural networks: a theoretical view", M. Bianchini and M. Gori, Dipartimento di Sistemi e Informatica, University of Florence.

Fig. 1

Example 1: standard learning pattern

In this example we consider a multilayer feed-forward network for the XOR boolean function, with the cost function and the standard learning environment (see the first four rows of the table above).

The "Net output" pane shows how the net separates the solution space, which is not linearly separable, into three subspaces. The output was computed on a grid over the square R = [0,1] x [0,1] of the 2-dimensional plane, with the point p(0,0) at the bottom-left corner of the pane. A white pixel signifies a value of 0 and a black pixel a value of 1; many pixels are gray, showing values in between. Because the learning patterns are not linearly separable, the cost function is not guaranteed to be free of local minima. The cost function was computed as the Hamming distance between the net output and the target z (assumed to be |x - y|), with the same pixel colors as above. The error surface shows that, despite the non-linear separability, the net appears to be reasonably well trained against the target, with a wide global minimum.
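The rendering described for this example can be sketched in plain Python. This is only an illustration under stated assumptions: the weights are hand-picked rather than learned by vdkann, all function names are hypothetical, and the per-pixel error is taken as |output - target|, which is one reading of the "Hamming distance" mentioned in the text.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

# Hand-picked (NOT trained) weights for a 2-2-1 network approximating XOR.
# Each hidden row is (w_x, w_y, bias); OUTPUT is (w_h1, w_h2, bias).
HIDDEN = [(20.0, 20.0, -10.0),   # fires when x + y > 0.5 (roughly OR)
          (20.0, 20.0, -30.0)]   # fires when x + y > 1.5 (roughly AND)
OUTPUT = (20.0, -20.0, -10.0)    # OR and not AND

def net_output(x, y):
    h = [sigmoid(wx * x + wy * y + b) for wx, wy, b in HIDDEN]
    return sigmoid(OUTPUT[0] * h[0] + OUTPUT[1] * h[1] + OUTPUT[2])

def target(x, y):
    # Target z assumed to be |x - y|, as stated in the text.
    return abs(x - y)

def pixel_error(x, y):
    # Assumed per-pixel cost: absolute difference between output and target.
    return abs(net_output(x, y) - target(x, y))

def render(n=11, f=net_output):
    # Sample f on an n x n grid over [0,1] x [0,1].
    # Rows are listed top to bottom, so p(0,0) is the bottom-left entry.
    rows = []
    for j in range(n - 1, -1, -1):
        y = j / (n - 1)
        rows.append([f(i / (n - 1), y) for i in range(n)])
    return rows

def gray(v):
    # Map a value in [0,1] to a gray level: 0 -> white (255), 1 -> black (0).
    return round(255 * (1.0 - v))

if __name__ == "__main__":
    for row in render(11):
        print(" ".join(f"{gray(v):3d}" for v in row))
```

With these weights the two hidden units draw the lines x + y = 0.5 and x + y = 1.5, which cut the square into three bands; calling `render(11, pixel_error)` gives the corresponding error picture.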


Example 2: extended learning pattern

In this example we consider the same network and cost function as in example 1, but with different learning patterns: pattern E from the table above was added to the training set.

The "Net output" pane shows that the solution space is no longer clearly divided into three regions as in example 1, but into several spurious solution regions. Depending on the initial weights, the cost function shows that the error can get stuck at points where the cost is far from zero; some local minima are evident as well. The presence of these local minima is related to the symmetry of the learning environment and is not necessarily an indication of poor training, however. Training can be rapidly improved by using more than two hidden units (left as an exercise for the reader).
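The dependence on the initial weights, and the effect of adding hidden units, can be explored with a small experiment such as the sketch below. It is not the vdkann code: it uses plain batch gradient descent on a squared-error cost (an assumption), all names are hypothetical, and the coordinates and target of pattern E are not given in this text, so `PATTERN_E` is a placeholder that should be replaced with the values from the table.

```python
import math
import random

XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
       ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
# Placeholder only: NOT taken from the table, replace with the real pattern E.
PATTERN_E = ((0.5, 0.5), 0.0)

def sigmoid(a):
    # Numerically stable logistic function.
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def forward(hidden, output, x):
    # hidden: one (w_x, w_y, bias) row per unit; output: unit weights + bias.
    h = [sigmoid(wx * x[0] + wy * x[1] + b) for wx, wy, b in hidden]
    out = sigmoid(sum(v * hk for v, hk in zip(output, h)) + output[-1])
    return h, out

def cost(hidden, output, patterns):
    # Assumed cost: half the sum of squared errors over the patterns.
    return 0.5 * sum((forward(hidden, output, x)[1] - t) ** 2
                     for x, t in patterns)

def train(patterns, seed, n_hidden=2, epochs=5000, lr=0.5):
    """Batch gradient descent from seeded random weights; returns final cost."""
    rng = random.Random(seed)
    hidden = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(n_hidden)]
    output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    for _ in range(epochs):
        g_hidden = [[0.0] * 3 for _ in range(n_hidden)]
        g_output = [0.0] * (n_hidden + 1)
        for x, t in patterns:
            h, out = forward(hidden, output, x)
            d_out = (out - t) * out * (1.0 - out)
            for k in range(n_hidden):
                d_h = d_out * output[k] * h[k] * (1.0 - h[k])
                g_hidden[k][0] += d_h * x[0]
                g_hidden[k][1] += d_h * x[1]
                g_hidden[k][2] += d_h
                g_output[k] += d_out * h[k]
            g_output[-1] += d_out
        for k in range(n_hidden):
            for c in range(3):
                hidden[k][c] -= lr * g_hidden[k][c]
        for k in range(n_hidden + 1):
            output[k] -= lr * g_output[k]
    return cost(hidden, output, patterns)

if __name__ == "__main__":
    patterns = XOR + [PATTERN_E]
    for seed in range(5):
        print(seed, train(patterns, seed, n_hidden=2),
              train(patterns, seed, n_hidden=3))
```

Runs that end with a cost well above zero correspond to the stuck points described above; comparing the two columns gives a rough feel for how an extra hidden unit changes the outcome for a given seed.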

Copyright Mario Motta 2000